I will start by explaining what Positive Pay is (if you already know, you can skip this part). It is a popular service that North American banks offer to prevent fraud on the printed checks you issue. You print a check to pay a vendor and send it by regular mail. At the same time, usually by the end of the day, you produce a file, following your bank’s specifications for this service, that lists all the issued checks with date, amount and beneficiary, and you send it to your bank.
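
To make the idea of the daily issue file concrete, here is a minimal sketch in Python. The CSV layout, the field names and the `build_issue_file` helper are assumptions made up for illustration; every bank publishes its own specification, and this is not any real bank's format.

```python
import csv
import io
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass(frozen=True)
class IssuedCheck:
    check_number: str
    issue_date: date
    amount: Decimal
    payee: str


def build_issue_file(checks):
    """Render issued checks as CSV text (hypothetical layout, not a real bank spec)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["check_number", "issue_date", "amount", "payee"])
    for check in checks:
        writer.writerow([
            check.check_number,
            check.issue_date.isoformat(),
            f"{check.amount:.2f}",  # always two decimals
            check.payee,
        ])
    return buffer.getvalue()


# Example data only: one check issued today.
issued_today = [
    IssuedCheck("100234", date(2024, 3, 1), Decimal("450.00"), "Acme Supplies"),
]
issue_file = build_issue_file(issued_today)
```

The point is only that the file carries one line per issued check with the fields the bank will later compare against.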

Once your vendor presents the check for payment at the bank, the bank cross-references it against the information you provided. If any of the data does not match, the bank stops payment of that check and sends you an exception notice (online). You then decide whether the check gets paid or not. This way you can prevent check fraud by someone who has altered information on the check (usually the amount and/or the beneficiary). If you do not have this service, your bank could easily end up paying the check, leaving you on the hook to absorb the fraud.
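
The cross-reference described above can be sketched roughly as follows. This is an illustration of the principle only, not how any particular bank implements it; the `review_presented_check` function, the dictionary layout and the sample data are all hypothetical.

```python
from decimal import Decimal

# Issue file contents as the bank might hold them, keyed by check number (example data).
issued = {
    "100234": {"amount": Decimal("450.00"), "payee": "Acme Supplies"},
}


def review_presented_check(check_number, amount, payee, issued):
    """Return 'pay' when every field matches the issue file, else 'exception'."""
    record = issued.get(check_number)
    if record is None:
        # The check number was never reported as issued.
        return "exception"
    if record["amount"] != amount or record["payee"] != payee:
        # Amount or beneficiary differs from what the issuer reported.
        return "exception"
    return "pay"


genuine = review_presented_check("100234", Decimal("450.00"), "Acme Supplies", issued)
altered = review_presented_check("100234", Decimal("23000.00"), "Acme Supplies", issued)
unknown = review_presented_check("999999", Decimal("450.00"), "Acme Supplies", issued)
```

Here `genuine` comes back as `"pay"`, while the altered amount and the unknown check number both come back as `"exception"`, which is the point where you would be asked to decide.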

A few years ago, a client of mine had a check altered. The mail was somehow intercepted, all the information on the check was copied onto a brand new check, and a $450 CAD check was turned into a $23k CAD check. The bank ended up paying it. Luckily for my client, we also had Electronic Bank Statement (EBS) in place. The day after the check was paid, when we tried to reconcile it, the system could not find that check number with that amount. We then investigated in our system and found that the check number had been issued for $450 instead of $23k. They called the bank right away and, thank God, the bank recognized it and absorbed the hit. In this case, Positive Pay would have been ideal, as it would have stopped the check from being paid in the first place.

How did we do Positive Pay in SAP before S/4 HANA 1709?

The standard SAP Tcode FCHX (program RFCHKE00) is designed to output a file that provides exactly this information (up to a point) so you can send it to your bank. But ... there is always a but. Every bank has its own set of requirements when it comes to Positive Pay, and the file specifications vary from bank to bank; they are not standardized like EBS or electronic payments. So this program will most likely not work out of the box for you. If it does, you should buy a lottery ticket!

This program works with two structures, DTACHKH (header) and DTACHKP (line items / checks), which hold all the “necessary” information that is output. I put “necessary” in quotes because in some cases your bank might ask you for some extra information.

Until now, there were several alternatives to solve this:

#1 – Take a copy of this program and make a Z transaction (this is the most popular option and I have done it a couple of times already). You also copy the two structures and make them Z. Once you have a copied program, you can control the information you need, the file format, the logic, etc. That way you output a file as per your bank’s specifications. If you need this for several banks, you create as many Z programs as you have banks. Or you can enhance it further with some sort of bank selection so that one program controls the different output file formats.

#2 – Modify the standard delivered structures (DTACHKH and DTACHKP) to include or remove what you need. This does not give you much flexibility and limits you to a single bank. If you add another bank in the future, this approach will not work.

#3 – Write a fully custom program to create your own extract and build your file as per your bank’s needs. In the end, all the information is stored in tables REGUP and REGUH. It is more work, as you have to build a program from scratch: a selection screen, file handling, etc. In a sense, this is what FCHX already does. I have seen this approach suggested by people on the Internet. (It is not the one I would go with.)

#4 – Use FCHX as it is out of the box and let it create the file. Then create a second program that picks up this file and formats it (and enhances it if needed) the way your bank requires. (An old client of mine did it this way.)

#5 – Do the same as option #4, but transform the file in your middleware and send it to the bank directly from there.
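
To give a feel for the "transform afterwards" idea behind options #4 and #5 (and the bank selection mentioned in #1), here is a small sketch in Python. Everything in it is hypothetical: the generic record layout is not the real FCHX output, and `BANK_A` and `BANK_B` are invented layouts, not actual bank specifications. In practice this logic would live in an ABAP program or in your middleware.

```python
from decimal import Decimal


def format_bank_a(check):
    # Hypothetical fixed-width layout: check number zero-padded to 10,
    # amount in cents zero-padded to 12, payee left-aligned in 30 characters.
    cents = int(check["amount"] * 100)
    return f"{check['check_number']:0>10}{cents:012d}{check['payee']:<30}"


def format_bank_b(check):
    # Hypothetical semicolon-delimited layout with a decimal amount.
    return f"{check['check_number']};{check['amount']:.2f};{check['payee']}"


# One formatter per bank; adding a bank means adding one entry here.
FORMATTERS = {"BANK_A": format_bank_a, "BANK_B": format_bank_b}


def transform(checks, bank_key):
    """Convert generic check records into the lines expected by the chosen bank."""
    formatter = FORMATTERS[bank_key]
    return [formatter(check) for check in checks]


# Example data standing in for the generic extract.
generic_extract = [
    {"check_number": "100234", "amount": Decimal("450.00"), "payee": "Acme Supplies"},
]
bank_a_lines = transform(generic_extract, "BANK_A")
bank_b_lines = transform(generic_extract, "BANK_B")
```

Keeping one formatter per bank behind a single selector is the same design choice as the "bank selection" enhancement in option #1: the extract stays the same and only the output layout changes.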

As you can see, there are many options, each with its pros and cons and requiring more or less effort.

My preferred option was always #1, until I recently discovered a new standard way delivered by SAP with S/4 HANA 1709 (FPS02), which is the environment I was working with.

How do we do Positive Pay in SAP S/4 HANA 1709 today?

It is much simpler, quicker and less custom (Z) than it used to be.

The standard SAP Tcode FCHX (program RFCHKE00) now allows you to work with a DMEE structure, so you can design and output your custom file as per your bank’s needs without any ABAP development or modifications to the program or its structures. You just design your output file with Tcode DMEEX (Extended DMEE) and then you can output the file.

As you can see in the screenshot below, FCHX has a line at the bottom where you can specify your “Payment Medium format”. This last option was not there in previous versions, such as ECC 6.0. As of 1709 you have this possibility.

Then, when it comes to building your output file, you work with DMEEX (the extended version, not the traditional DMEE, but it is almost the same) in the same way as if you were building any other electronic payment file for your bank. The difference is that the format is called by FCHX instead of by your Payment Program (F110).

Once you have filled out the corresponding information in your selection screen, you execute it and a file is created (see the confirmation message below).

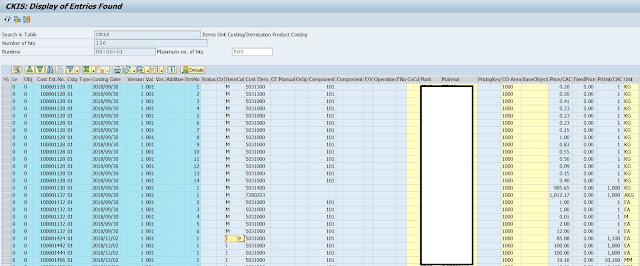

Now ... where is that file going? It is created in TemSe, which you reach with Tcode FDTA (Fiori App “Manage Payment Media”).

Then you go there, enter your selection criteria and execute.

Once you are here, you select the line and download the file, as you might already be doing with any other electronic payment file.

In this case, I created a custom DMEE format called “ZBOA_US_POSIPAY” as per my bank’s specifications (here, Bank of America).

With S/4 HANA 1709, there is a standard delivered DMEEX format for Positive Pay that you can use as a starting point to create your own custom DMEE. This format is called “US_POSIPAY”. You copy it and create your own Z format. You can create as many custom DMEE formats as you have banks. This way you do not need to change your FCHX program; you only need to change the “Payment Medium format” on the execution screen. You stay standard and there are no issues when it comes to an upgrade.

This saves you from having to do any ABAP, and you are completely independent, as any functional FICO consultant with the right knowledge can do it. It does not require development anymore.

Note: Out of the box, FCHX has an issue working with any DMEE format other than the standard delivered US_POSIPAY, and you need to implement an OSS Note to correct this bug. I was probably one of the first in the world to attempt this solution, as it was not working and SAP did not know about it. I ended up reporting it to SAP via an OSS Message. It took around 2 months of exchanges with them before they finally released this OSS Note. After that, it works well. So now I am able to say that there is an OSS Note out there that was released thanks to me.

This Note applies to S4CORE 102 (1709), 103 (1809), 104 (1909).

OSS Note

If your company and/or project needs to implement this or any of the functionalities described in my blog, or needs advice about them, do not hesitate to reach out to me and I will be happy to provide my services.